Low-altitude UAV detection and tracking algorithms in complex backgrounds


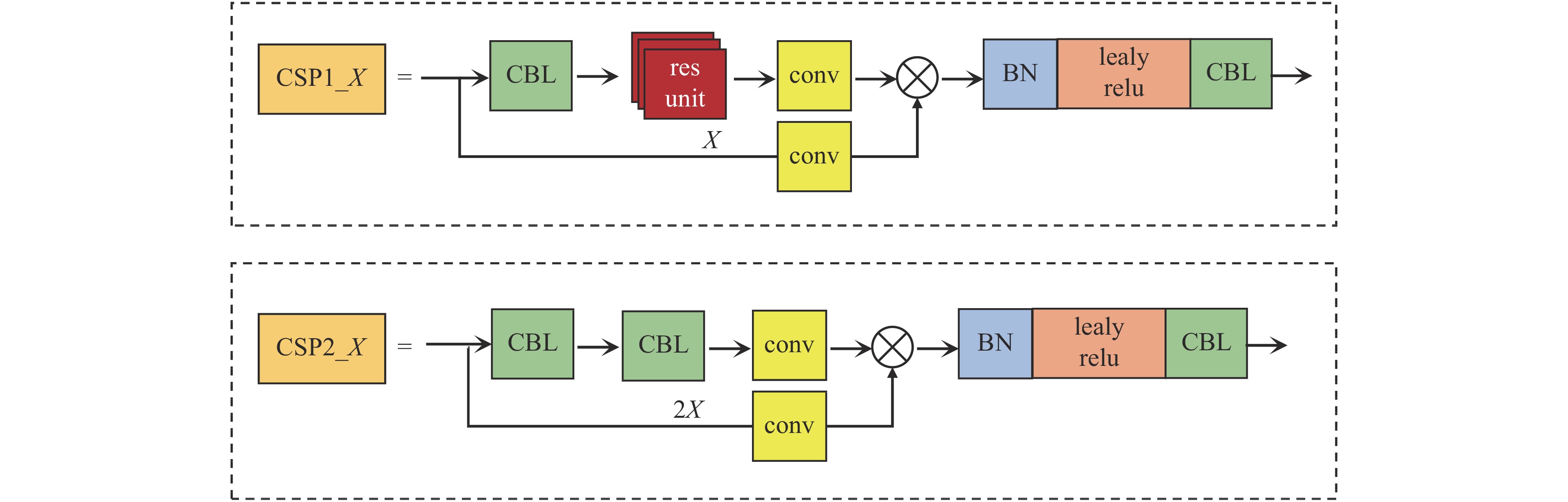

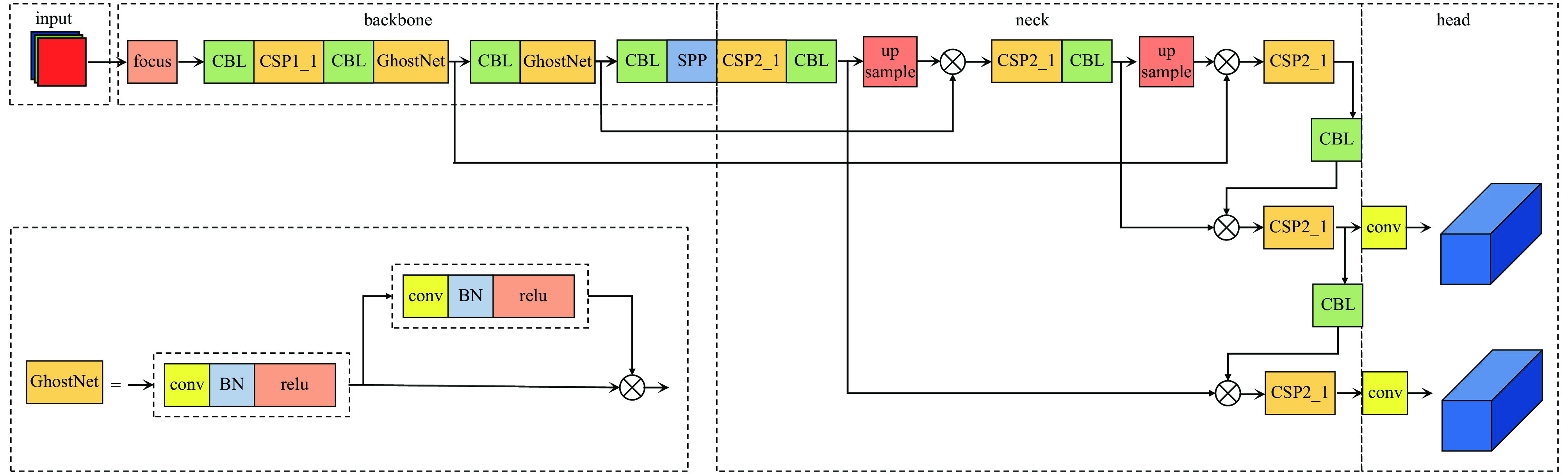

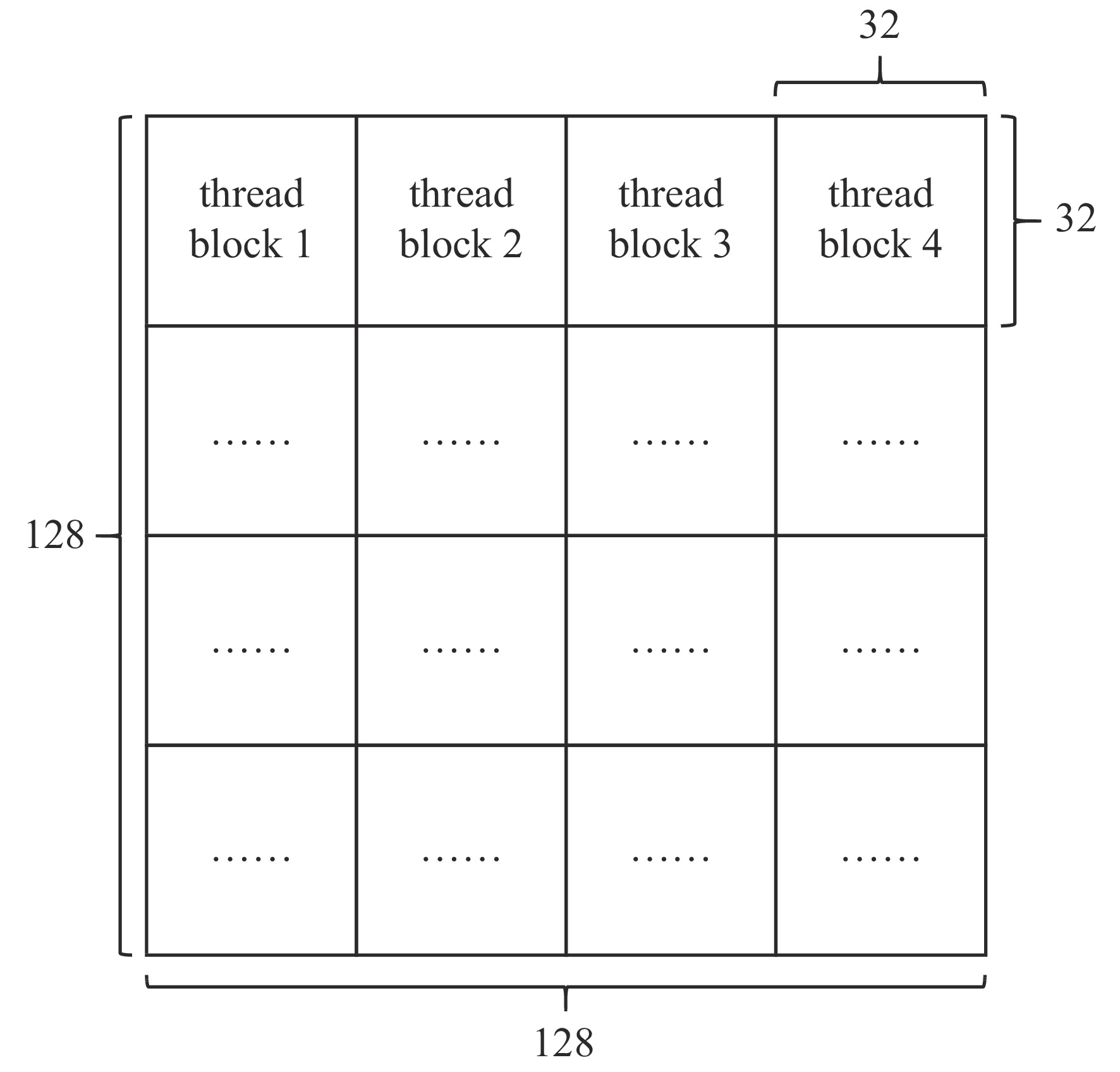

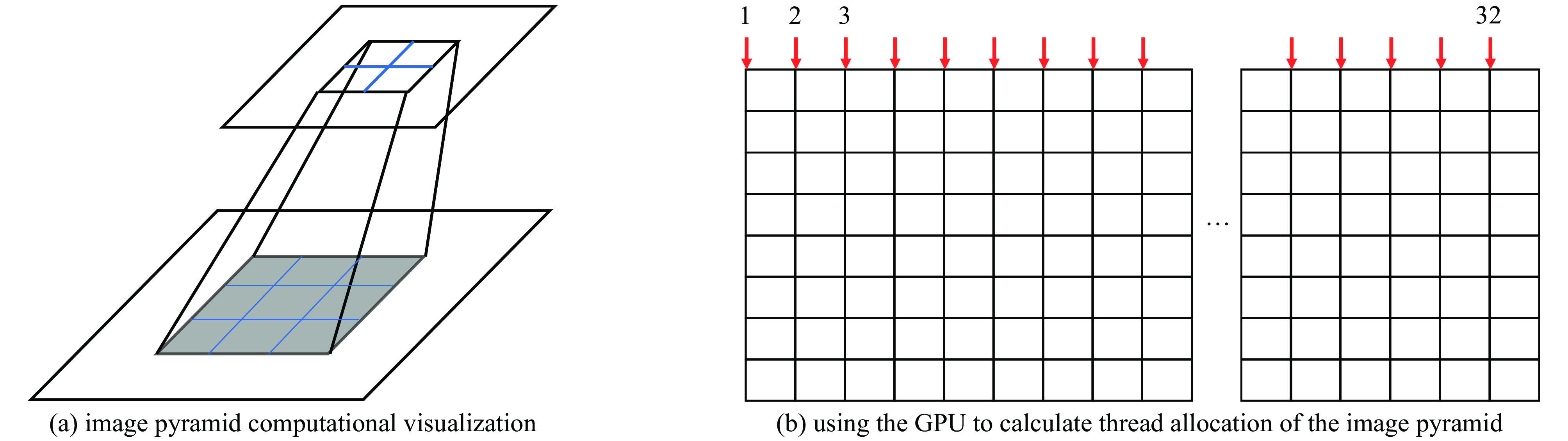

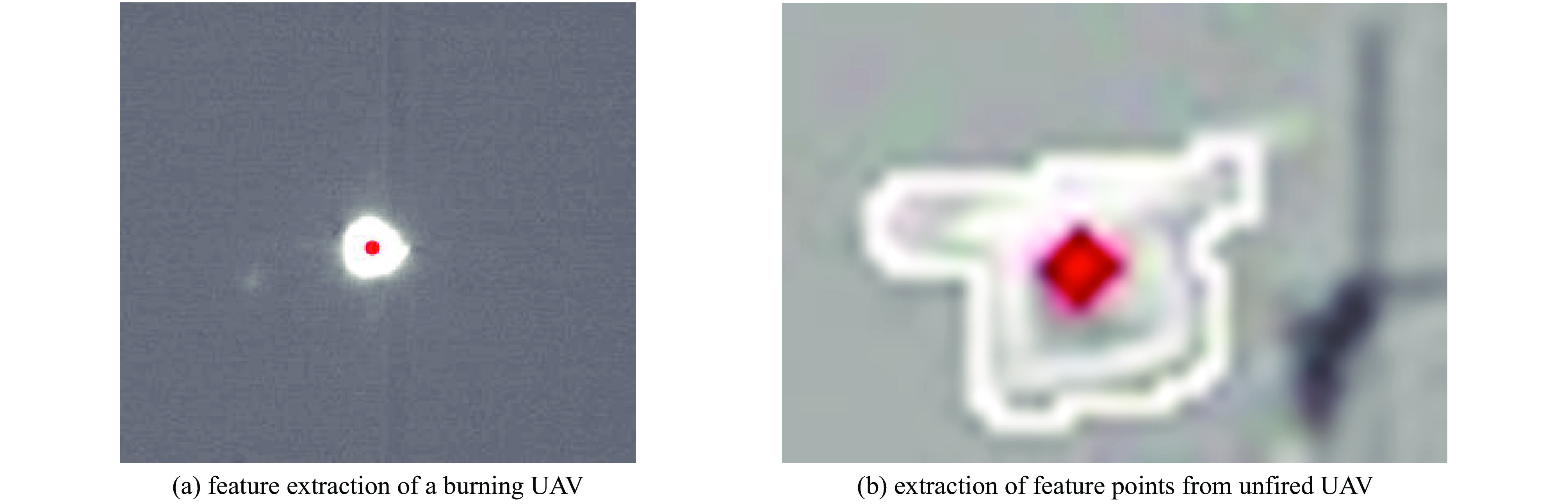

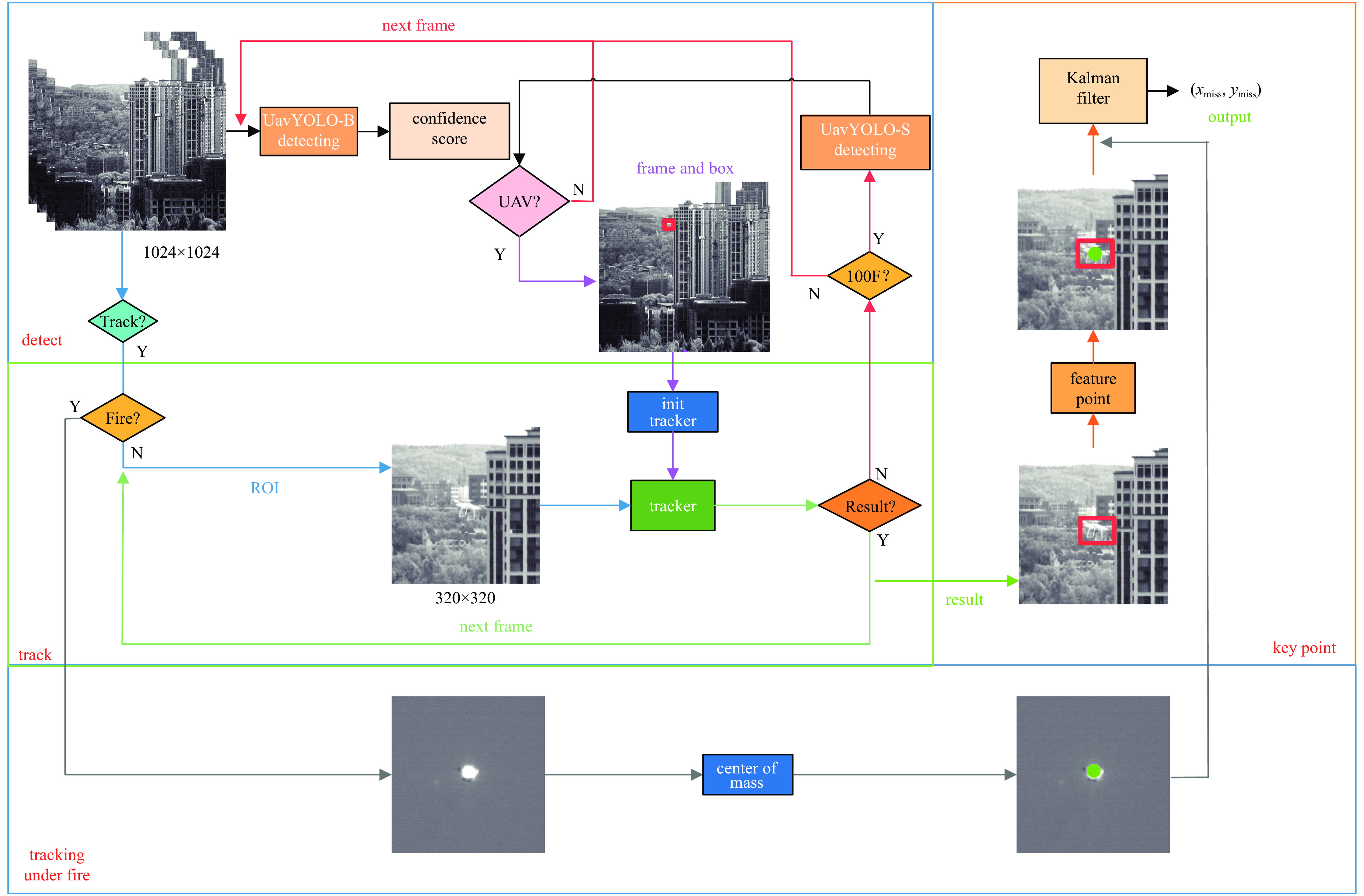

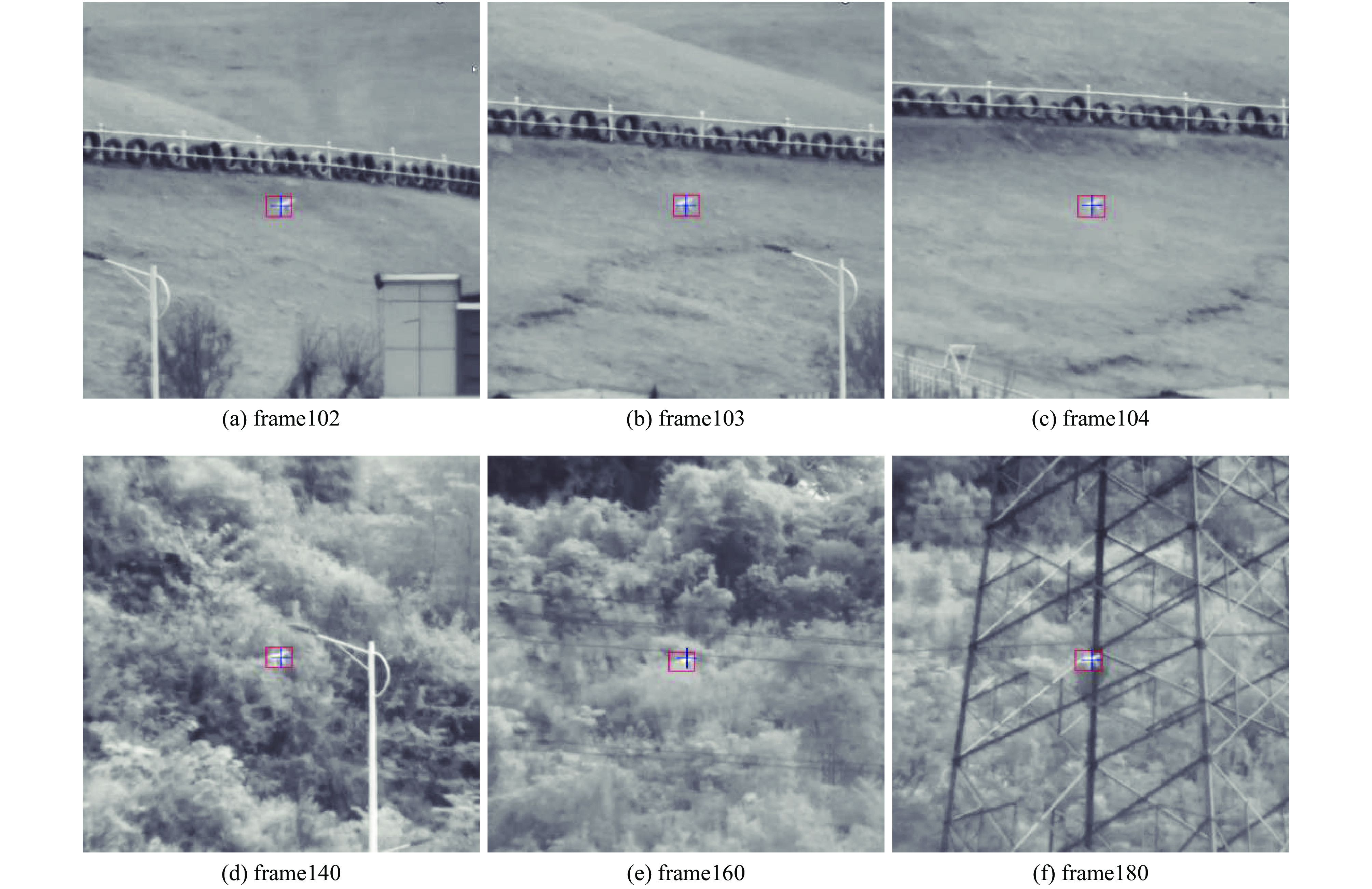

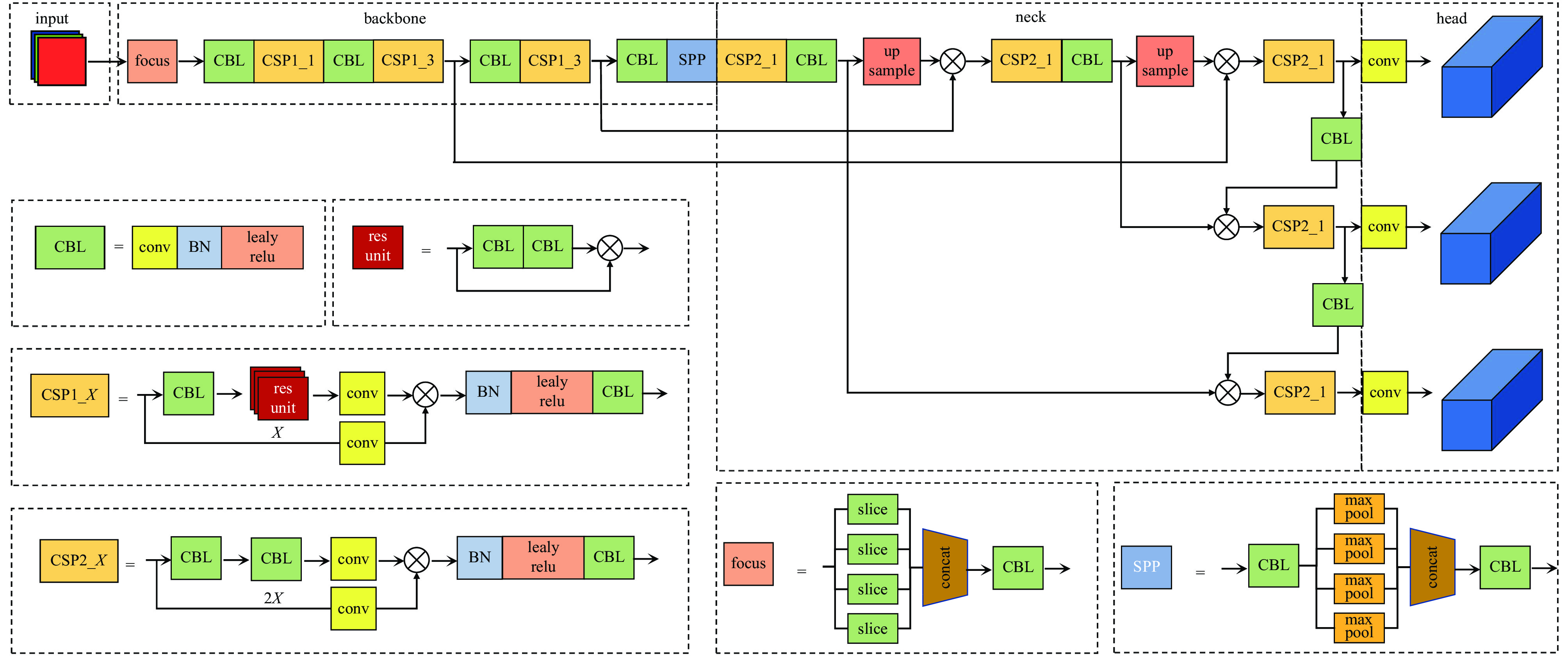

Abstract: With UAVs appearing frequently in several recent local wars and armed conflicts, UAV detection and tracking has become a research hotspot in imaging and related fields. Low-altitude UAV targets are highly maneuverable, small, and low in contrast, and they are often seen against complex backgrounds. This makes capturing and tracking them a major challenge in electro-optical detection. To address these difficulties, this paper proposes a real-time long-term tracking method based on YOLOv5 and an optimized CSRT algorithm, which achieves stable tracking of UAVs in clear-sky, urban, and forest scenes. First, two capture networks with different input resolutions are built for different stages of tracking: one is optimized for small-target detection and the other for performance. Positive and negative samples suited to the characteristics of UAV imagery are added to the dataset for data augmentation. Then, the CSRT algorithm is accelerated on the GPU and combined with feature point extraction to build a low-altitude UAV detection and tracking model. Finally, the algorithm is deployed with TensorRT and evaluated on a self-built dataset. The experimental results show that the proposed method tracks at 400 FPS on an RTX 2080Ti and at 70 FPS on an NVIDIA Jetson NX. Stable long-duration tracking is also achieved in real field experiments.
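The abstract only states that two capture networks with different input resolutions serve different stages of tracking. A minimal sketch of one way such a loop could be organised, assuming (this is not stated in the source) that the 1024×1024 network searches the whole frame until the target is found, after which the 320×320 network runs only on a crop centred on the last known position, and CSRT carries the target through detector misses. `Box`, `roi_around`, `CaptureTracker`, and `max_misses` are hypothetical names; the detectors and the tracker are injected as plain callables rather than tied to any real inference API.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Box:
    """Target box: centre (cx, cy) and size (w, h), in pixels."""
    cx: float
    cy: float
    w: float
    h: float


def roi_around(cx: float, cy: float, frame_w: int, frame_h: int,
               size: int = 320) -> Tuple[int, int]:
    """Top-left corner of a size x size crop centred on (cx, cy), clamped to the frame."""
    x0 = int(round(cx - size / 2))
    y0 = int(round(cy - size / 2))
    x0 = max(0, min(x0, frame_w - size))
    y0 = max(0, min(y0, frame_h - size))
    return x0, y0


class CaptureTracker:
    """Hypothetical SEARCH/TRACK loop; detectors and tracker are injected stand-ins."""

    def __init__(self,
                 detect_full: Callable[[object], Optional[Box]],
                 detect_roi: Callable[[object, int, int, int], Optional[Box]],
                 csrt_update: Callable[[object, Box], Optional[Box]],
                 max_misses: int = 5, roi_size: int = 320):
        self.detect_full = detect_full  # stand-in for the 1024x1024 network (full frame)
        self.detect_roi = detect_roi    # stand-in for the 320x320 network; returns ROI coordinates
        self.csrt_update = csrt_update  # stand-in for the GPU CSRT tracker update
        self.max_misses = max_misses    # assumed: consecutive detector misses tolerated before re-search
        self.roi_size = roi_size
        self.mode = "SEARCH"
        self.box: Optional[Box] = None
        self.misses = 0

    def step(self, frame, frame_w: int, frame_h: int) -> Optional[Box]:
        if self.mode == "SEARCH":
            det = self.detect_full(frame)
            if det is not None:
                self.box, self.mode, self.misses = det, "TRACK", 0
            return det
        # TRACK: run the small network on a crop around the last known position.
        x0, y0 = roi_around(self.box.cx, self.box.cy, frame_w, frame_h, self.roi_size)
        det = self.detect_roi(frame, x0, y0, self.roi_size)
        if det is not None:
            # Shift the ROI-relative detection back into full-frame coordinates.
            self.box = Box(det.cx + x0, det.cy + y0, det.w, det.h)
            self.misses = 0
            return self.box
        # Detector missed: let CSRT carry the target until max_misses is exceeded.
        self.misses += 1
        tracked = self.csrt_update(frame, self.box)
        if tracked is None or self.misses > self.max_misses:
            self.mode, self.box = "SEARCH", None
            return None
        self.box = tracked
        return tracked
```

Injecting the detectors as callables keeps the sketch independent of any inference runtime; in a TensorRT deployment like the one described above, these callables could wrap the engine calls.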


Keywords:

- drone detection

- real-time tracking

- complex background

- maneuvering target

- drone countermeasures


Table 1. Performance comparison of different detection algorithms

| Algorithm | Input image size/pixel | δAP50/% | δAP75/% | Detection speed/(frames·s⁻¹) |
|---|---|---|---|---|
| YOLOv5 | 1024×1024 | 86.2 | 57.8 | 35 |
| UavYOLO-B | 1024×1024 | 89.1 | 59.2 | 25 |
| UavYOLO-S | 320×320 | 88.3 | 58.5 | 200 |
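Converting the throughput figures in Table 1 into per-frame time makes the trade-off between the two capture networks explicit. A small worked calculation using only the table's values; `TABLE1` and `latency_ms` are illustrative names:

```python
# Values copied from Table 1: (input side in pixels, detection speed in frames/s).
TABLE1 = {
    "YOLOv5": (1024, 35),
    "UavYOLO-B": (1024, 25),
    "UavYOLO-S": (320, 200),
}


def latency_ms(fps: float) -> float:
    """Per-frame processing time implied by a throughput in frames/s."""
    return 1000.0 / fps


for name, (side, fps) in TABLE1.items():
    print(f"{name:10s} {side}x{side}: {latency_ms(fps):5.1f} ms/frame")

# Input pixel budget and measured speed ratio between the two capture networks.
pixel_ratio = (1024 / 320) ** 2  # 1024x1024 input has 10.24x the pixels of 320x320
speed_ratio = 200 / 25           # UavYOLO-S runs 8x faster than UavYOLO-B
print(f"pixel ratio {pixel_ratio:.2f}x, speed ratio {speed_ratio:.1f}x")
```

This gives about 28.6, 40.0 and 5.0 ms per frame for YOLOv5, UavYOLO-B and UavYOLO-S. Shrinking the input from 1024×1024 to 320×320 cuts the pixel count by 10.24× and raises the measured speed by 8×, while the δAP50 of UavYOLO-S (88.3%) remains above that of the YOLOv5 baseline (86.2%).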

Table 2. Performance comparison of different detection algorithms

| Algorithm | Capture accuracy/% | Miss distance/pixel | Tracking speed/(frames·s⁻¹) |
|---|---|---|---|
| YOLOv5 | 87.2 | 10.6 | 35 |
| UavYOLO-B+UavYOLO-S | 93.2 | 5.4 | 25 |
| UavYOLO-B+UavYOLO-S+CSRT | 97.8 | 4.8 | 75 |
| UavYOLO-B+UavYOLO-S+CSRT+KeyPoints | 97.8 | 1.5 | 75 |
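In Table 2, adding the keypoint stage leaves capture accuracy unchanged but cuts miss distance from 4.8 to 1.5 pixels, which suggests it refines the target centre rather than the capture decision. The source does not say how the keypoints are computed, so the sketch below swaps in a simpler stand-in: Otsu thresholding of the tracker's box (assuming a UAV darker than the sky) followed by the centroid of the foreground pixels. It also scores results under assumed metric definitions: capture accuracy as the fraction of frames with an estimate, and miss distance as the mean Euclidean distance in pixels between estimated and ground-truth centres. `otsu_threshold`, `refine_center`, and `capture_metrics` are illustrative names.

```python
import math
from typing import List, Optional, Sequence, Tuple

Image = List[List[int]]  # grayscale, values 0..255, indexed img[row][col]


def otsu_threshold(pixels: Sequence[int]) -> int:
    """Otsu's method: threshold t maximising between-class variance (class 0 is v <= t)."""
    hist = [0] * 256
    for v in pixels:
        hist[v] += 1
    total = len(pixels)
    sum_all = sum(i * n for i, n in enumerate(hist))
    w0, sum0 = 0, 0
    best_t, best_var = 0, -1.0
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        var_between = w0 * w1 * (mean0 - mean1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def refine_center(img: Image, box: Tuple[int, int, int, int]) -> Optional[Tuple[float, float]]:
    """Centroid of dark (assumed target) pixels inside box = (x0, y0, w, h); None if no contrast."""
    x0, y0, w, h = box
    crop = [(x, y, img[y][x]) for y in range(y0, y0 + h) for x in range(x0, x0 + w)]
    t = otsu_threshold([v for _, _, v in crop])
    fg = [(x, y) for x, y, v in crop if v <= t]
    if not fg or len(fg) == len(crop):
        return None  # uniform crop: keep the tracker's own estimate
    cx = sum(x for x, _ in fg) / len(fg)
    cy = sum(y for _, y in fg) / len(fg)
    return cx, cy


def capture_metrics(est: Sequence[Optional[Tuple[float, float]]],
                    gt: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """(capture accuracy, mean miss distance in pixels) under the assumed definitions above."""
    hits = [(e, g) for e, g in zip(est, gt) if e is not None]
    accuracy = len(hits) / len(gt)
    miss = sum(math.dist(e, g) for e, g in hits) / len(hits) if hits else float("nan")
    return accuracy, miss
```

For a target brighter than its background (for example against dark foliage), the comparison in `refine_center` would need to be flipped; choosing the polarity automatically is left out of this sketch.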

